Biography

Xiaoxiang Han is a Ph.D. student at the SMART Lab, School of Communication and Information Engineering, Shanghai University, Shanghai, China, supervised by Prof. Qi Zhang. He is dedicated to closely integrating artificial intelligence with healthcare. His main research interests include pattern recognition, medical image/video analysis, weakly/semi/self-supervised learning, and trustworthy AI.

- Medical Image/Video Analysis

- Weakly/Semi/Self-Supervised Learning

- Computer Vision and Pattern Recognition

- Trustworthy AI

- PhD in Information and Communication Engineering, Shanghai University

- MEng in Electronic Information, 2024, University of Shanghai for Science and Technology

- BEng in Computer Science and Technology, 2021, Jinling Institute of Technology

Featured Papers

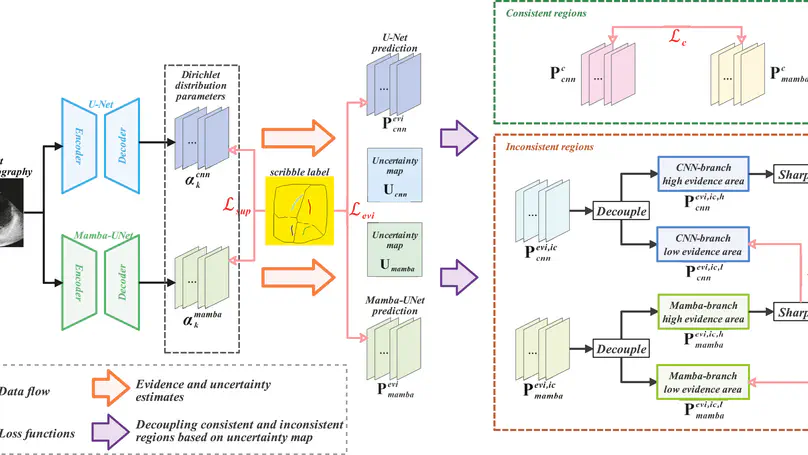

Segmenting anatomical structures and lesions from ultrasound images contributes to disease assessment, diagnosis, and treatment. Weakly supervised learning (WSL) based on sparse annotation has demonstrated the potential to reduce annotation costs, and this study introduces scribble-based WSL into ultrasound image segmentation. However, ultrasound images often suffer from poor contrast and unclear edges, and supervision for edges is insufficient, which makes edge prediction challenging. Uncertainty modeling has been shown to help models handle these issues. Nevertheless, existing uncertainty estimation paradigms lack robustness and often filter out predictions near decision boundaries, resulting in unstable edge predictions. We therefore propose to make effective use of predictions near decision boundaries. Specifically, we introduce the Dempster-Shafer Theory (DST) of evidence to design an Evidence-Guided Consistency (EGC) strategy. This strategy uses high-evidence predictions, which are more likely to occur near high-density regions, to guide the optimization of low-evidence predictions that may appear near decision boundaries. Furthermore, the varying sizes and locations of lesions in ultrasound images challenge CNNs, whose local receptive fields hinder global information modeling. We therefore introduce Visual Mamba, a structured state space model, to capture long-range dependencies with linear complexity, and propose a hybrid CNN-Mamba framework that fuses local and global information. During training, the CNN branch and the Mamba branch collaborate, each learning from the other under the EGC strategy. Extensive experiments on four public ultrasound datasets for binary-class and multi-class segmentation demonstrate the competitiveness of the proposed method. The scribble-annotated dataset and code will be made available at https://github.com/GtLinyer/MambaEviScrib.
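The evidence-based idea in the abstract can be illustrated with a small sketch. It is not taken from the paper or its repository: it assumes the common subjective-logic formulation of evidential learning (non-negative evidence, Dirichlet parameters `alpha = evidence + 1`, uncertainty mass `K / sum(alpha)`), and the function names `evidential_opinion` and `select_guide` are hypothetical. For a single pixel, it shows how the branch with more evidence could be chosen to guide the other.

```python
import math


def softplus(x):
    # Numerically stable softplus, used here to turn raw outputs into non-negative evidence.
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def evidential_opinion(logits):
    """Map per-class raw outputs to belief masses and an uncertainty mass.

    Assumed formulation (subjective logic): evidence e_k >= 0,
    alpha_k = e_k + 1, S = sum(alpha), b_k = e_k / S, u = K / S.
    """
    evidence = [softplus(z) for z in logits]
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    belief = [e / strength for e in evidence]
    uncertainty = len(logits) / strength
    return belief, uncertainty


def select_guide(logits_cnn, logits_mamba):
    """Return which branch has lower uncertainty (higher evidence) at this pixel.

    Hypothetical helper: the higher-evidence prediction would act as the
    target for the lower-evidence one in a consistency loss.
    """
    _, u_cnn = evidential_opinion(logits_cnn)
    _, u_mamba = evidential_opinion(logits_mamba)
    return "cnn" if u_cnn <= u_mamba else "mamba"


if __name__ == "__main__":
    belief, u = evidential_opinion([5.0, -5.0])
    print("belief:", belief, "uncertainty:", u)
    print("guide:", select_guide([5.0, -5.0], [0.1, 0.0]))
```

In this formulation the belief masses and the uncertainty mass always sum to one, so a confident prediction (large evidence for one class) leaves little mass for uncertainty, while a prediction near the decision boundary keeps a large uncertainty mass instead of being discarded.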

Contact

- hanxx@shu.edu.cn

- No. 99 Shangda Road, Baoshan District, Shanghai, 200444